Understanding Generative AI (Part I)

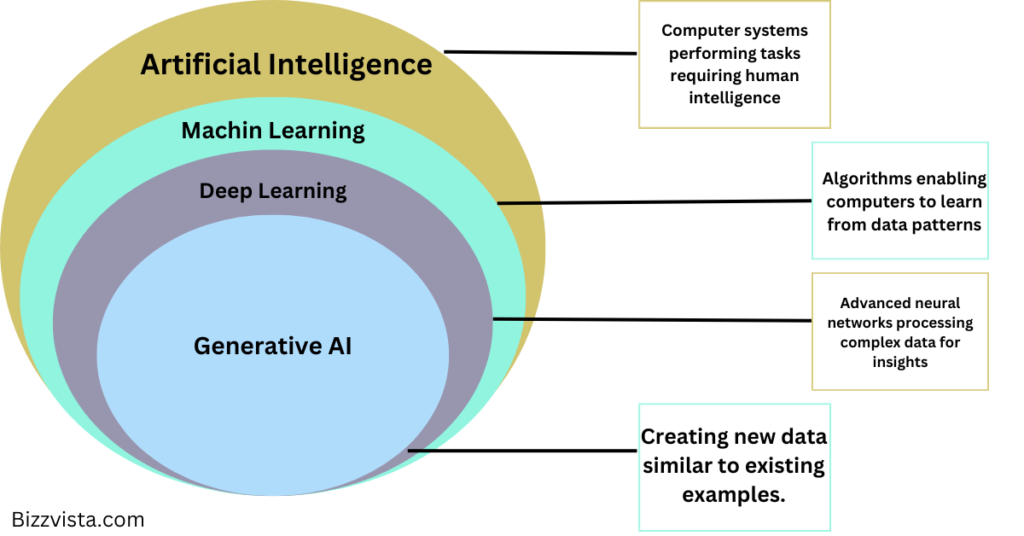

Generative AI is a branch of artificial intelligence that uses deep learning models to create human-like content such as text, images, and other types of data. These models are trained on vast amounts of data and produce outputs that are statistically plausible given that data. The output is not an exact copy of the training data but resembles it, which is how the system can create new content.

The Evolution of Generative AI

Generative AI has been part of AI technology for a long time, but it has gained momentum with recent progress. The previous major advance came in computer vision, where AI could turn selfies into Renaissance-style portraits. The current focus is on natural language processing: large language models are being developed that can produce text on virtually any theme, from poetry to software code. Deep generative models such as variational autoencoders (VAEs) have played a vital role in this progress. VAEs, introduced in 2013, were the first deep-learning models to be widely used for generating realistic images and speech. They work by encoding unlabeled data into a compressed representation and then decoding it back into its original form, with the ability to create variations on the original data.
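
As a rough illustration of that encode, sample, and decode flow, here is a minimal sketch in plain Python. The weight matrices (`w_mu`, `w_logvar`, `w_dec`) are hand-picked, untrained values invented for this example, and the linear encoder and decoder are an assumption made for brevity; a real VAE learns these mappings with neural networks.

```python
import math
import random

def linear(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def encode(x, w_mu, w_logvar):
    """Compress x into the mean and log-variance of a latent Gaussian."""
    return linear(w_mu, x), linear(w_logvar, x)

def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def decode(z, w_dec):
    """Map a latent vector back to the input space."""
    return linear(w_dec, z)

# Hypothetical, untrained weights: 4-dim input, 2-dim latent space.
w_mu = [[0.5, 0.0, 0.0, 0.25],
        [0.0, 0.5, -0.5, 0.0]]
w_logvar = [[0.0] * 4, [0.0] * 4]   # log-variance 0, so sigma = 1
w_dec = [[1.0, 0.0], [0.0, 1.0], [0.0, -1.0], [0.5, 0.0]]

rng = random.Random(0)              # fixed seed for repeatability
x = [1.0, 0.5, -0.5, 2.0]
mu, log_var = encode(x, w_mu, w_logvar)

# Each sample from the latent distribution decodes to a different variation.
variations = [decode(sample_latent(mu, log_var, rng), w_dec) for _ in range(3)]
```

Sampling around the encoded mean, rather than decoding the mean alone, is what produces variations instead of a single reconstruction.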

The Transformer Revolution

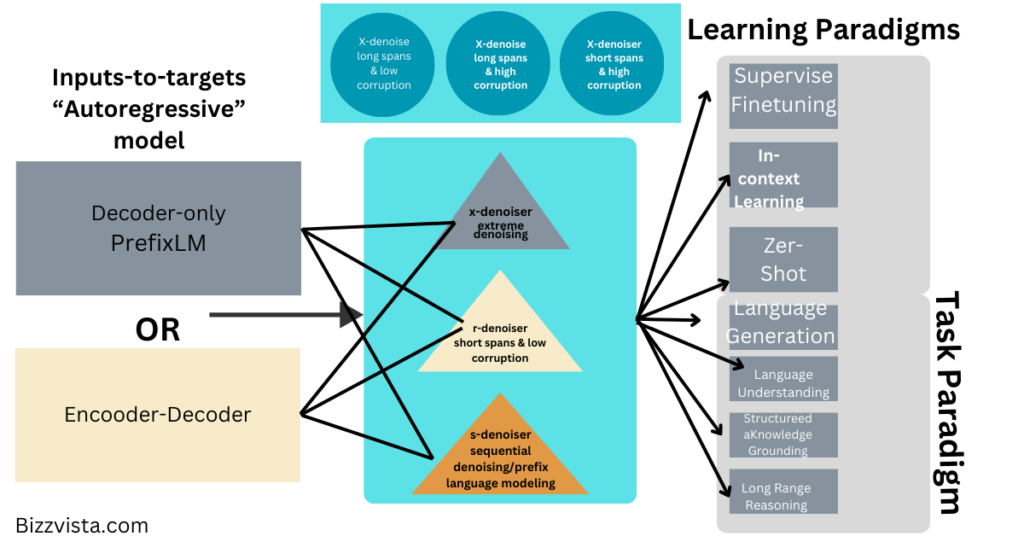

In 2017, Google introduced the transformer, which revolutionized how language models are trained. The transformer is an encoder-decoder architecture with two parts: an encoder that converts raw, unannotated text into embeddings, and a decoder that uses those embeddings to predict each word in a sentence. This lets transformers learn how words and sentences relate to each other. The result is a strong language representation, built without the text having to be explicitly labeled with its grammatical features.

Transformers can process all the words in a sentence at once, which allows parallel processing and speeds up training. They also learn the positions of words and their relationships, providing context that lets them infer meaning and disambiguate words in long sentences.
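
The mechanism that relates every word to every other word is self-attention. The sketch below is a simplified version in plain Python: it uses the token embeddings directly as queries, keys, and values, whereas a real transformer applies learned projection matrices first and adds positional information. The three 2-dimensional `embeddings` are made-up values for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with x as queries, keys, and values."""
    dim = len(x[0])
    outputs, all_weights = [], []
    for query in x:
        # Score this token against every token in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in x]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(w * value[j] for w, value in zip(weights, x))
                        for j in range(dim)])
    return outputs, all_weights

# Hypothetical embeddings for a three-token sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings)
```

Each row of `weights` depends only on the input, not on the result for any other token, which is why real implementations compute all rows together as one matrix operation instead of looping.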

Types of Language Transformers

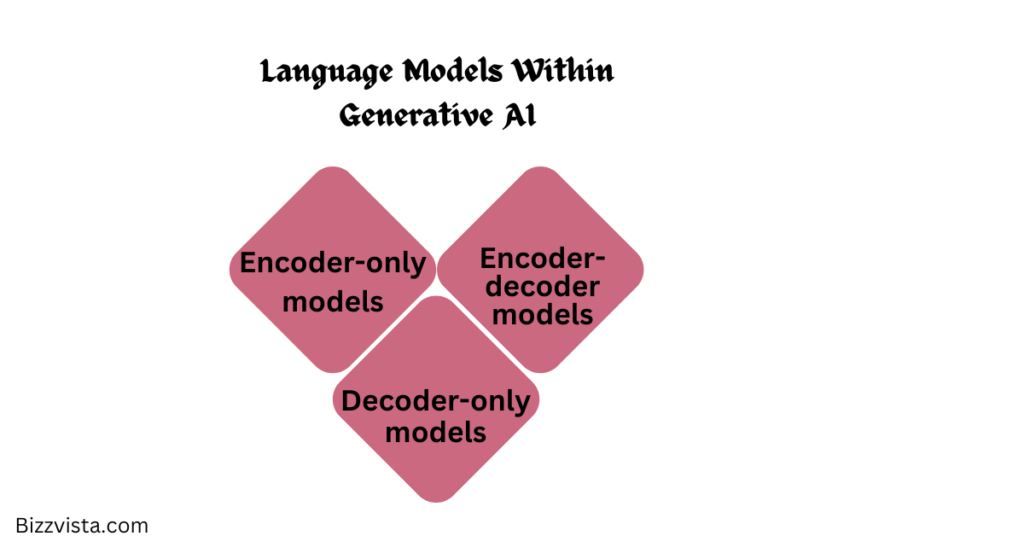

Language transformers can be categorized into three main types: encoder-only models, decoder-only models, and encoder-decoder models.

- Encoder-only models, like BERT, are widely used for non-generative tasks like classifying customer feedback and extracting information from long documents. They power search engines and customer-service chatbots, including IBM’s Watson Assistant.

- Decoder-only models, like the GPT family of models, are trained to predict the next word without an encoded representation. GPT-3, released by OpenAI in 2020, was the largest language model of its kind at the time, with 175 billion parameters.

- Encoder-decoder models, like Google’s Text-to-Text Transfer Transformer (T5), combine features of both BERT and GPT-style models. They can perform many of the generative tasks that decoder-only models can, but their compact size makes them faster and cheaper to tune and serve.
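
The next-word prediction that decoder-only models perform can be pictured as a loop: predict a word, append it to the text, and predict again. The sketch below shows that loop with a deliberately simple stand-in for the model, a table of word-pair counts built from a made-up `corpus`. This is an assumption for illustration only; GPT-style models use a transformer to score the next word, not a count table.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_new_tokens):
    """Greedy generation: repeatedly append the most frequent follower."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for the last word
        out.append(followers.most_common(1)[0][0])
    return out

corpus = "the cat sat on the mat and the cat slept".split()
counts = train_bigram(corpus)
generated = generate(counts, "on", 2)
```

Here `generated` is `["on", "the", "cat"]`, because "the" is the only word seen after "on" and "cat" is the most frequent word after "the".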

The Role of Supervised Learning in Generative AI

While generative AI has largely been powered by the ability to harness unlabeled data, supervised learning is making a comeback. Developers are increasingly using supervised learning to shape our interactions with generative models. Instruction-tuning, introduced with Google’s FLAN series of models, has enabled generative models to assist in a more interactive, generalized way. When a model is fed instructions paired with responses on a wide range of topics, it can generate not just statistically probable text, but humanlike answers to questions or requests.

The Power of Prompts and Zero-Shot Learning

The use of prompts, or initial inputs fed to a foundation model, allows the model to be customized to perform a wide range of tasks. In some cases, no labeled data is required at all. This approach, known as zero-shot learning, allows the model to perform tasks it hasn’t explicitly been trained to do. To improve the odds the model will produce what you’re looking for, you can provide one or more examples in what’s known as one- or few-shot learning. These methods dramatically lower the time it takes to build an AI solution, since minimal data gathering is required to get a result.
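
The difference between zero-shot and few-shot prompting comes down to what text is placed in front of the model. The helper below is a hypothetical sketch: `build_prompt`, the "Review:" and "Sentiment:" labels, and the sample reviews are all invented for this example and do not correspond to any particular model's required format.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a prompt; with no examples it is zero-shot, otherwise few-shot."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output pattern.
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")  # left open for the model to complete
    return "\n".join(lines)

instruction = "Classify the sentiment of each review as positive or negative."
query = "The battery died after two days."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("Arrived quickly and works perfectly.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
)
```

Either string would then be sent to a foundation model as its input. No model weights change in either case; only the prompt differs.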

Overcoming the Limitations of Zero- and Few-Shot Learning

Despite their power, zero- and few-shot learning come with a few limitations. Many generative models are sensitive to how their instructions are formatted, which has inspired a new AI discipline known as prompt engineering. Another limitation is the difficulty of incorporating proprietary data, often a key asset. Techniques like prompt-tuning and adapters have emerged as alternatives, allowing the model to be adapted without having to adjust its billions to trillions of parameters.
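
To give a sense of why adapters are cheap, the sketch below uses one common adapter design, a low-rank update added alongside a frozen weight matrix (the idea behind LoRA). This specific design is swapped in here as an illustration and is not named in the text above; the layer size (4096 by 4096) and rank (8) are assumed values.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def adapted_forward(w_frozen, a, b, x):
    """Compute y = W x + B (A x). W stays frozen; only A and B would be trained."""
    base = matvec(w_frozen, x)
    update = matvec(b, matvec(a, x))
    return [p + q for p, q in zip(base, update)]

def param_counts(d_in, d_out, rank):
    """Return (parameters in the full matrix, parameters in the adapter)."""
    return d_in * d_out, rank * (d_in + d_out)

# Tiny worked example: 2x2 frozen layer with a rank-1 adapter.
w_frozen = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 1.0]]          # rank x d_in
b = [[0.5], [0.0]]        # d_out x rank
y = adapted_forward(w_frozen, a, b, [2.0, 3.0])

# Assumed layer size and rank, chosen only to show the scale of the saving.
full, adapter = param_counts(d_in=4096, d_out=4096, rank=8)
```

With these assumed sizes the adapter has 65,536 trainable values against 16,777,216 in the full matrix, under half a percent of the layer.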

The Future of Generative AI

The future of generative AI is likely to be influenced by several trends. One is the continued interest in the emergent capabilities that arise when a model reaches a certain size. Some labs continue to train ever larger models chasing these emergent capabilities. However, recent evidence suggests that smaller models trained on more domain-specific data can often outperform larger, general-purpose models. This suggests that smaller, domain-specialized models may be the right choice when domain-specific performance is important.

Another trend is the emerging practice of model distillation, where the capabilities of a large language model are distilled into a much smaller model. This approach calls into question whether large models are essential for emergent capabilities. Taken together, these trends suggest we may be entering an era where more compact models are sufficient for a wide variety of practical use cases.
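
One common way to distill a model is to train the small "student" to match the large "teacher's" output probabilities, not just the correct answers. The sketch below computes that matching term as a KL divergence between temperature-softened distributions. The logits and the temperature of 2.0 are invented for illustration, and practical recipes typically combine this term with a standard loss on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens them."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical logits over a three-word vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

The loss is near zero when the student's distribution matches the teacher's and grows as they diverge, so `loss_close` is smaller than `loss_far`. Minimizing it pushes the student toward the teacher's behavior.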

The Risks of Generative AI

While generative AI holds enormous potential, it can also introduce new risks, be they legal, financial or reputational. Many generative models can output information that sounds authoritative but isn’t true or is objectionable and biased. They can also inadvertently ingest information that’s personal or copyrighted in their training data and output it later, creating unique challenges for privacy and intellectual property laws. Solving these issues is an open area of research.